Engineers at the University of Pennsylvania recently created the first reprogrammable photonic chip that can train a type of neural network with pulses of light instead of electricity. Neural networks (NNs) are best suited to learning from large datasets, and they excel at the tasks models are most often used for today, such as image classification and natural language processing. They are now so common that models like ChatGPT and DeepSeek are built on them. While these NNs are undoubtedly useful, the hardware that runs them at their current speed requires massive amounts of electricity, both to process queries and to keep the equipment cool. With an enormous volume of user queries arriving around the clock, data centers must remove the heat generated by vast numbers of processors.

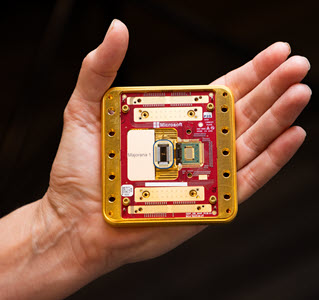

Photonic chips can mitigate this problem because they compute with light rather than with electric currents, which generate heat as they flow. In a photonic neural processor, waveguides and optical resonators direct light through intricate on-chip circuits, where computations such as matrix multiplication are carried out as the light propagates, without the resistive losses of electronic wiring. The University of Pennsylvania design employs a novel nonlinear optical material: input data is encoded in one beam of light, and a second “pump” beam dynamically modifies the material’s refractive index to implement activation functions. Because photons do not generate heat the way electrons do, photonic processors run cooler, which could eliminate bulky heat sinks and reduce or even remove power-hungry cooling systems. Less waste heat means data centers can shrink their cooling footprint, use less water or refrigerant, and lower their carbon emissions. As AI workloads continue to grow, these savings accumulate, offering a path to sustainable training of ever-larger models.
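To make the two-beam idea concrete, here is a toy numerical sketch in Python. It is not a model of the Penn chip's physics. It only mirrors the structure described above: a matrix multiplication applied to the signal, followed by an activation whose shape depends on a pump setting. The names `linear_layer`, `pumped_activation`, and `photonic_layer`, the `tanh` curve, and the 0-to-1 pump scale are all assumptions chosen for illustration.

```python
import math

def linear_layer(weights, signal):
    """Matrix-vector product, standing in for the optical matrix multiplication."""
    return [sum(w * x for w, x in zip(row, signal)) for row in weights]

def pumped_activation(amplitude, pump):
    """Hypothetical activation: pump=0 leaves the signal unchanged (linear),
    pump=1 applies a fully saturating tanh response. Real devices differ."""
    return (1.0 - pump) * amplitude + pump * math.tanh(amplitude)

def photonic_layer(weights, signal, pump):
    """One toy layer: linear mixing, then a pump-controlled nonlinearity."""
    return [pumped_activation(a, pump) for a in linear_layer(weights, signal)]

# Illustrative values only; not taken from any measurement.
WEIGHTS = [[0.5, -0.2],
           [0.1, 0.8]]
SIGNAL = [1.0, 0.5]

for pump in (0.0, 0.5, 1.0):
    print(pump, photonic_layer(WEIGHTS, SIGNAL, pump))
```

In this sketch, changing `pump` reshapes the activation without touching the weights, which loosely parallels how the second beam is described as reprogramming the material's response.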

Beyond training, photonic technologies are also changing inference and data transport. Silicon photonic interconnects have already begun displacing copper links in hyperscale data centers, carrying terabytes of information per second with far less energy loss. Researchers are developing fully integrated photonic memory and neuromorphic photonic circuits that could execute complete inference pipelines using light alone, significantly lowering the overhead of moving data between electronic processors. Together, these advances point toward a cohesive “all-optical” AI architecture that minimizes both computational and communication energy consumption.

The impact could be felt at the edge as well. As photonic accelerators shrink in size and cost, they could be incorporated into phones or even self-driving cars, enabling on-device AI with significantly lower power consumption and heat dissipation than today’s microelectronic chips. Offloading inference from centralized data centers to edge nodes distributed across the network would reduce both network-level energy consumption and latency. Meanwhile, new optical cooling concepts such as laser-driven solid-state heat pumps are being investigated as a way to build self-cooled photonic modules that need neither fans nor water loops.

As global demand for AI services continues to climb, sustainable computing is becoming increasingly necessary. By using photons instead of electrons for both core computation and high-bandwidth interconnects, light-based neural networks offer an attractive path to reducing electricity usage, cooling infrastructure, and carbon footprints.